Smarter AI Workloads Ahead

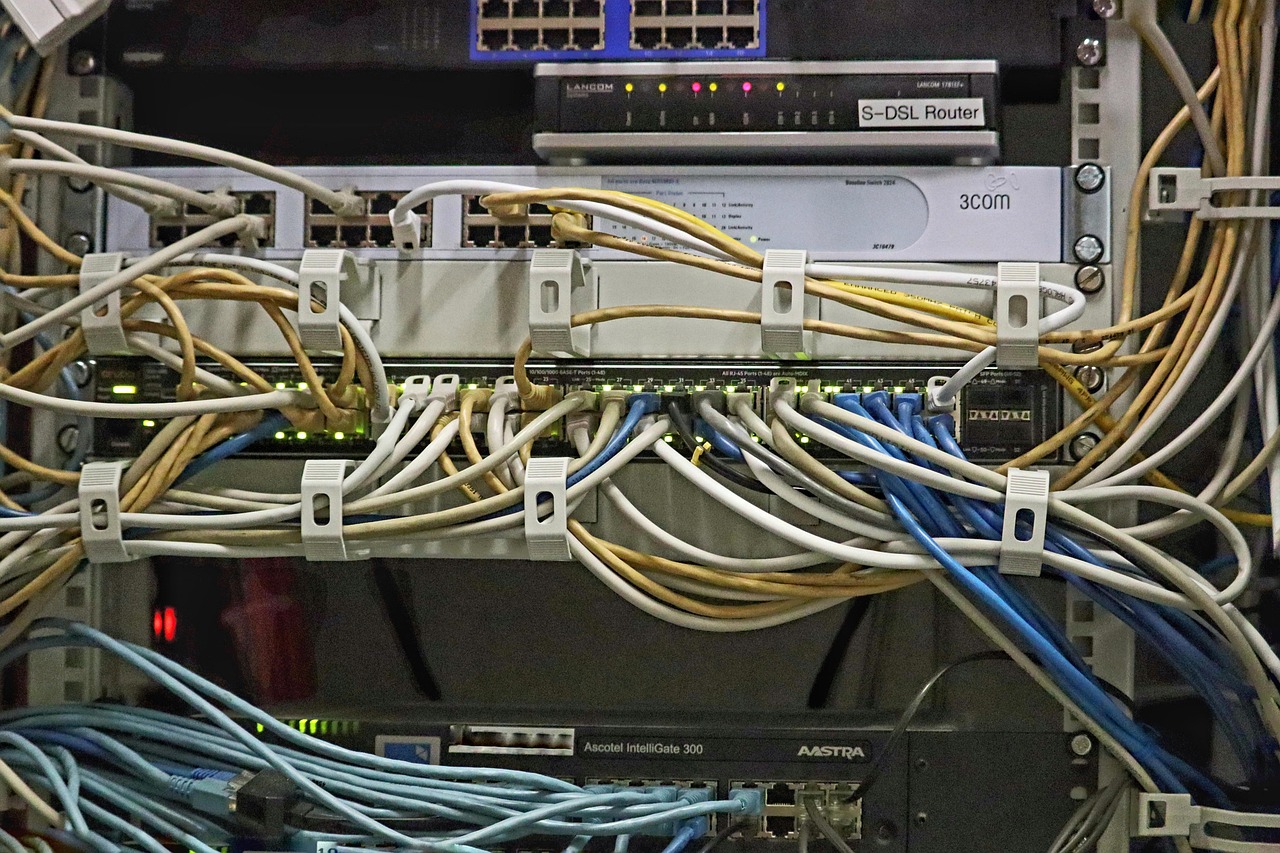

Let’s cut to the chase: if you’re running Apache Spark jobs on Kubernetes, you know it can get messy real quick. As workloads scale, performance issues start popping up like weeds in a garden. But don’t sweat it: there’s a new toolkit in town that promises to optimize your Spark deployments, and it’s backed by some solid benchmarking results.

Recently, the folks at Kubeflow unveiled the Kubeflow Spark Operator Benchmarking Results and Toolkit. This isn’t just some light reading; it’s a comprehensive framework designed to help you make sense of your Spark jobs when they start to pile up. We’re talking about tackling the big issues, like CPU saturation, API slowdowns, and job scheduling headaches. And let’s be honest, who hasn’t banged their head against the wall trying to get those jobs to run smoothly?

The Lowdown on Performance Bottlenecks

Now, why should you care?

Because ignoring these performance roadblocks can cripple your efficiency. For example, when the Spark Operator is CPU-bound, it limits how many jobs you can submit at once. Trust me, nobody wants to be stuck waiting for a job to kick off only to realize the controller pod is maxed out. And here’s another kicker: API server latency climbs as workloads ramp up. You’re left staring at slow job status updates, which can throw a wrench in your observability efforts. Plus, there’s that pesky webhook overhead that adds about 60 seconds of delay to each job. So, if you’re running thousands of jobs, those delays can pile up faster than your laundry after a busy week.
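To put a rough number on that webhook overhead, here is a back-of-the-envelope sketch. It takes the 60-second figure quoted above at face value and simply sums it over every job; the function name and the job counts are made up for illustration, and real wall-clock impact depends on how many jobs start in parallel.

```python
WEBHOOK_DELAY_S = 60  # per-job webhook overhead quoted in the text


def cumulative_delay_hours(num_jobs, delay_s=WEBHOOK_DELAY_S):
    """Total webhook delay summed over all jobs, in hours."""
    return num_jobs * delay_s / 3600


# Illustrative job counts only, not benchmark data.
for jobs in (100, 1000, 5000):
    print(f"{jobs} jobs: {cumulative_delay_hours(jobs):.1f} hours of added start-up delay")
```

At 1,000 jobs that works out to roughly 16.7 hours of added start-up delay across the fleet, which is why the webhook shows up in the recommendations that follow.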

Solutions That Work

What’s the fix?

The benchmarking results provide a treasure trove of actionable recommendations. Here’s what you should consider:

1. Deploy Multiple Spark Operator Instances: One instance often can’t keep up with high job submission rates. By distributing workloads across multiple namespaces, each with its own operator instance, you can reduce the bottleneck and keep things running smoothly.
2. Disable Webhooks: If you can, ditch the webhooks that slow down job starts. Instead, define Spark Pod Templates directly in the job definitions to save time.
3. Increase Controller Workers: The default is 10, but if you’ve got the CPU power, bump that up to 20 or even 30 for better throughput. More workers let the controller process more job submissions concurrently.
4. Use a Batch Scheduler: The default Kubernetes scheduler isn’t great for batch workloads. Tools like Volcano or YuniKorn can help schedule jobs more efficiently, so give them a whirl.
5. Optimize API Server Scaling: As workloads grow, make sure your API server can handle the load. Scaling up replicas and resources can make a huge difference.
6. Distribute Spark Jobs Across Namespaces: Running too many jobs in one namespace can lead to failures due to overload. Spread those jobs around to keep everything running smoothly.
7. Monitor with Grafana: To really keep your finger on the pulse, use the open-source Grafana dashboard that comes along with the toolkit. It’ll help you visualize job processing efficiency and system health in real time.
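Two of those recommendations, spreading jobs across namespaces and opting into a batch scheduler, can be sketched in a few lines of Python. Treat this as a minimal illustration rather than the toolkit’s own method: the job names, namespace names, image, and jar path are all hypothetical, the manifest is trimmed to a handful of SparkApplication fields, and the operator-side setup (which instance watches which namespace) is not shown.

```python
from itertools import cycle


def assign_namespaces(job_names, namespaces):
    """Round-robin jobs over namespaces so no single namespace takes the full load."""
    return dict(zip(job_names, cycle(namespaces)))


def spark_application(name, namespace, batch_scheduler="volcano"):
    """Build a trimmed-down SparkApplication manifest as a plain dict."""
    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Scala",
            "mode": "cluster",
            "image": "example.com/spark:placeholder",  # hypothetical image
            "mainClass": "org.apache.spark.examples.SparkPi",
            "mainApplicationFile": "local:///path/to/spark-examples.jar",  # hypothetical path
            "batchScheduler": batch_scheduler,  # e.g. "volcano" or "yunikorn"
            "driver": {"cores": 1, "memory": "512m"},
            "executor": {"instances": 1, "cores": 1, "memory": "512m"},
        },
    }


# Hypothetical job and namespace names.
jobs = [f"job-{i}" for i in range(6)]
namespaces = ["spark-team-a", "spark-team-b", "spark-team-c"]

assignment = assign_namespaces(jobs, namespaces)
manifests = [spark_application(job, ns) for job, ns in assignment.items()]
```

Each dict could then be dumped to YAML and applied with your usual tooling. Round-robin is just the simplest possible policy; if your jobs vary a lot in size, you would probably want to weight the assignment instead.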

The AI Connection with Katib

Now, while optimizing Spark workloads is essential, we can’t ignore the world of AI and machine learning, which is evolving faster than a speeding bullet. Enter Katib, a hyperparameter tuning tool that’s become a game changer for machine learning pipelines. As AI models get more sophisticated, optimizing their performance is no longer optional; it’s a necessity. Katib automates the tedious process of hyperparameter tuning, which can take forever if done manually. Whether you’re working with Retrieval-Augmented Generation (RAG) pipelines or any other complex ML models, Katib allows you to fine-tune parameters for better accuracy and results.
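If “hyperparameter tuning” sounds abstract, here is the idea at toy scale: a plain random search over two parameters. This is not Katib’s API, just a sketch of the kind of loop it automates and distributes across a cluster. The parameter names (`top_k`, `temperature`), their ranges, and the scoring function are all invented for illustration.

```python
import random


def toy_objective(top_k, temperature):
    """Hypothetical stand-in for a pipeline score; peaks at top_k=5, temperature=0.7."""
    return 1.0 - 0.01 * (top_k - 5) ** 2 - (temperature - 0.7) ** 2


def random_search(objective, space, trials=20, seed=0):
    """Sample each parameter uniformly from its range and keep the best trial."""
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(trials):
        params = {
            "top_k": rng.randint(*space["top_k"]),
            "temperature": rng.uniform(*space["temperature"]),
        }
        score = objective(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical search space: (low, high) bounds per parameter.
space = {"top_k": (1, 10), "temperature": (0.1, 1.5)}
best_params, best_score = random_search(toy_objective, space)
print(best_params, round(best_score, 4))
```

In a real setup each trial would mean running your actual pipeline, which is exactly why you want a tool to schedule and track those runs for you.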

Getting Hands-On with Katib

Here’s how you can get started with Katib:

1. Set Up Your Environment: Use a lightweight Kind cluster for local testing, but rest easy knowing you can scale up later.
2. Implement Your RAG Pipeline: Start with a retriever model and a generator model. Think Sentence Transformers for retrieval and GPT-2 for generating responses; these tools can significantly enhance your model’s performance.
3. Run Hyperparameter Tuning Experiments: Define your objective function to set what you want to optimize (like the BLEU score for text generation). Katib will take care of the rest, running multiple experiments with different configurations.
4. Evaluate and Iterate: Use metrics to see how your models perform. The BLEU score will help you gauge the quality of the generated text, a critical component in ensuring your model is on point.
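To make the BLEU objective from steps 3 and 4 concrete, here is a simplified, dependency-free version of the metric: sentence-level, single reference, whitespace tokenization, no smoothing. It is a sketch for intuition, not a replacement for a maintained implementation. The final `bleu=<value>` line reflects the assumption that your Katib metrics collector is set up to read `name=value` lines from stdout; check that against your own experiment config.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu(candidate, reference, max_n=4):
    """Simplified sentence-level BLEU against a single reference, no smoothing."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts, ref_counts = ngrams(cand, n), ngrams(ref, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        matched = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        if total == 0 or matched == 0:
            return 0.0  # without smoothing, one empty precision zeroes the score
        log_precisions.append(math.log(matched / total))
    # Brevity penalty discourages candidates shorter than the reference.
    bp = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(sum(log_precisions) / max_n)


# Made-up sentences standing in for generated and reference answers.
score = bleu("the cat sat on the mat", "the cat sat on the mat")
print(f"bleu={score:.4f}")
```

A perfect match scores 1.0, while a candidate that shares no 4-gram with the reference scores 0.0 here, so on short outputs you will likely want a smoothed variant.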

Final Thoughts

So, there you have it. Whether you’re looking to optimize your Spark workloads on Kubernetes or fine-tune your AI models with Katib, both the Kubeflow Spark Operator Toolkit and Katib are here to make your life easier. It’s all about improving efficiency, reducing latency, and boosting performance. And let’s be honest—these tools are not just for the tech-savvy; they’re designed to help anyone looking to get the most out of modern computing technology. So, roll up your sleeves, dive into these resources, and get ready to take your data operations to the next level. After all, in a world where data is king, you can’t afford to let inefficiencies reign.