
Advancing Machine Learning Systems with TensorFlow and MLSysBook.ai.

The evolution of machine learning (ML) technologies demands a comprehensive approach that bridges cutting-edge model development with robust system engineering.

Recent advancements in TensorFlow 2.20 and the emergence of MLSysBook.ai underscore the critical need to integrate efficient tooling, infrastructure optimization, and system-level best practices into ML workflows.

This synthesis examines how the latest TensorFlow release aligns with the principles of ML systems engineering and outlines a roadmap for deploying scalable, high-performance ML applications. TensorFlow 2.20 marks a significant milestone by introducing improvements that directly address performance and deployment challenges.

Notably, the deprecation of the tf.lite module in favor of LiteRT represents a strategic shift toward a more modular, hardware-accelerated inference framework.

LiteRT, launched as a standalone repository, strengthens on-device machine learning by providing unified support for Neural Processing Units (NPUs) alongside GPU acceleration. This unification hides vendor-specific compilers and drivers behind a single interface and enables zero-copy use of hardware buffers, which minimizes memory overhead and improves real-time inference throughput.
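Zero-copy means the consumer reads the producer's buffer in place rather than duplicating it first. The sketch below illustrates the idea with Python's built-in memoryview. It is a plain-Python analogy only, not the LiteRT API, and the camera_frame buffer and make_frame helper are hypothetical.

```python
# Illustrative analogy for zero-copy buffer sharing; NOT the LiteRT API.
# `make_frame` and `camera_frame` are hypothetical stand-ins for a hardware buffer.

def make_frame(size: int) -> bytearray:
    """Simulate an input buffer filled by a producer (e.g. a camera)."""
    return bytearray(range(256)) * (size // 256)

camera_frame = make_frame(1024)

# Copying path: slicing the bytearray and wrapping it in bytes() allocates new memory.
copied_input = bytes(camera_frame[:512])

# Zero-copy path: a memoryview slice references the original memory directly.
zero_copy_input = memoryview(camera_frame)[:512]

# The producer writes new data after both inputs were created.
camera_frame[0] = 255

print(copied_input[0])                      # 0: the copy is a stale snapshot
print(zero_copy_input[0])                   # 255: the view sees the live buffer
print(zero_copy_input.obj is camera_frame)  # True: no new backing buffer
```

On real accelerators much of the saving typically comes from skipping transfers between host and device memory, but the principle is the same: one buffer, shared by reference.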

Developers targeting embedded or mobile AI applications are encouraged to migrate to LiteRT to leverage these benefits and stay current with ongoing innovations.

Beyond hardware acceleration, TensorFlow 2.20 optimizes the input data pipeline by introducing autotune.min_parallelism in tf.data.Options.

This feature reduces initial latency by allowing dataset operations such as map and batch to start asynchronously with a specified minimum parallelism level.

The result is a faster warm-up of input pipelines, which is crucial for applications that require low-latency inference or training startup times.
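To see why a parallelism floor shortens warm-up, consider a deliberately simplified model in which a tuner starts at some parallelism level and doubles it each step up to a cap. The steps_to_first_batch function and its doubling policy are hypothetical assumptions for illustration and do not describe how tf.data's autotuner actually works; the real setting is autotune.min_parallelism in tf.data.Options.

```python
# Toy model (hypothetical, not tf.data's real autotuner): how a parallelism
# floor shortens the time until the first full batch is ready.
# Assumptions: each step produces `parallelism` elements, and the tuner then
# doubles parallelism until it reaches `max_parallelism`.

def steps_to_first_batch(batch_size: int, min_parallelism: int, max_parallelism: int) -> int:
    """Count steps until at least `batch_size` elements have been produced."""
    parallelism = min(max(1, min_parallelism), max_parallelism)  # start at the floor
    produced, steps = 0, 0
    while produced < batch_size:
        produced += parallelism  # elements finished during this step
        steps += 1
        parallelism = min(parallelism * 2, max_parallelism)  # ramp up toward the cap
    return steps

cold_start = steps_to_first_batch(batch_size=64, min_parallelism=1, max_parallelism=16)
warm_start = steps_to_first_batch(batch_size=64, min_parallelism=8, max_parallelism=16)
print(cold_start, warm_start)  # 8 5
```

Under these assumptions the floor cuts warm-up from 8 steps to 5; the gain in a real pipeline depends on the workload and hardware.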

Additionally, TensorFlow’s modularization extends to cloud storage integration: the tensorflow-io-gcs-filesystem package is now optional, which slims down the core package and encourages explicit dependency management.

While this package currently offers limited support for newer Python versions, the move reflects a broader trend toward more flexible and maintainable ML ecosystems.
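Making a dependency optional shifts responsibility to the application: code that reads gs:// paths should check for the filesystem plugin explicitly and fail with a clear message. A minimal sketch using importlib follows. The helpers has_optional, require_optional, and open_dataset_path are hypothetical, and the import name tensorflow_io_gcs_filesystem is an assumption about how the package is imported.

```python
import importlib.util

def has_optional(module_name: str) -> bool:
    """True if `module_name` can be found, without actually importing it."""
    return importlib.util.find_spec(module_name) is not None

def require_optional(module_name: str, pip_name: str) -> None:
    """Raise a clear error if an optional dependency is missing (hypothetical helper)."""
    if not has_optional(module_name):
        raise ImportError(
            f"'{module_name}' is not installed; install the '{pip_name}' package "
            "to enable this feature."
        )

def open_dataset_path(path: str) -> str:
    """Check plugin availability before touching a gs:// path (illustrative only)."""
    if path.startswith("gs://"):
        # Assumed import name of the optional GCS filesystem plugin.
        require_optional("tensorflow_io_gcs_filesystem", "tensorflow-io-gcs-filesystem")
    return path
```

Local paths pass straight through, while a gs:// path fails early with an actionable message instead of an obscure error deep inside the input pipeline.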

The Imperative of ML Systems Engineering.

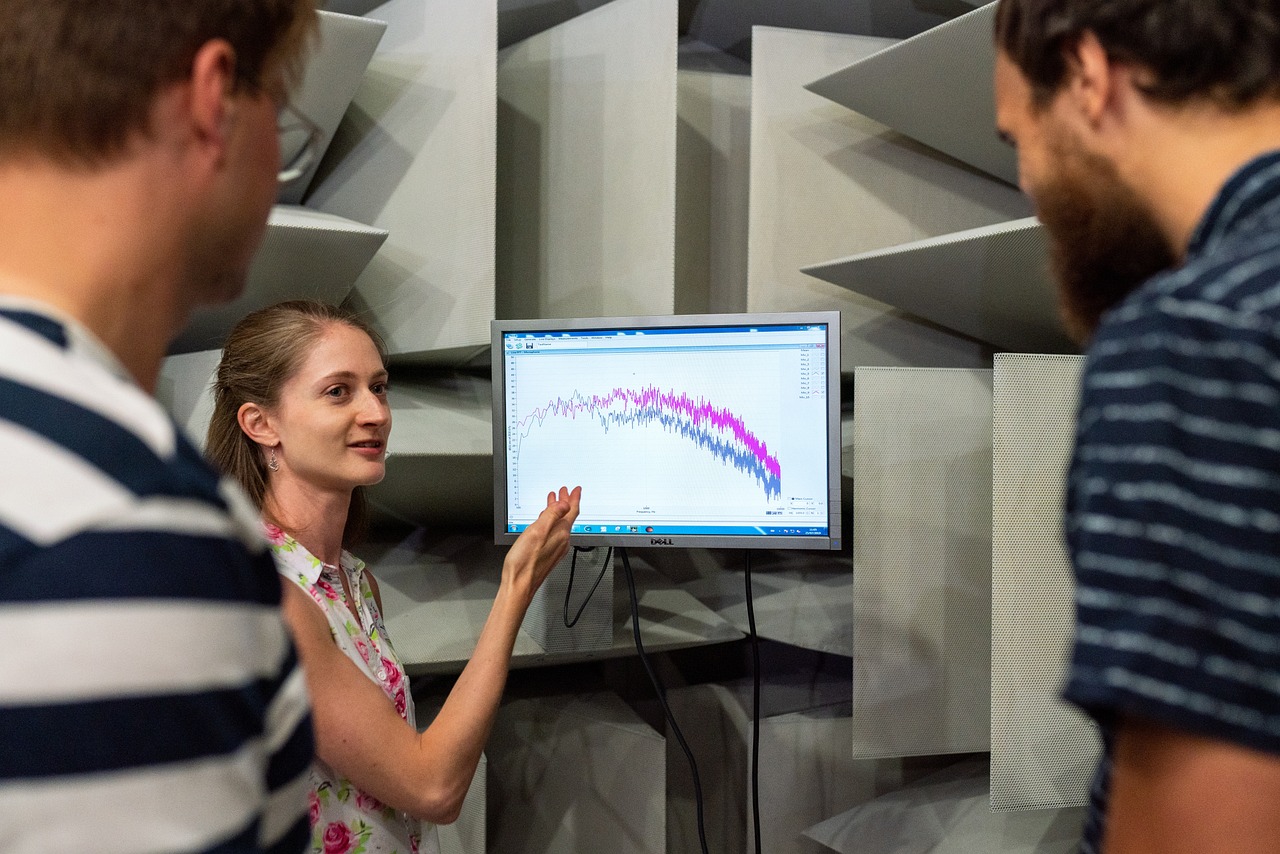

While advances in frameworks like TensorFlow provide powerful tools, the broader challenge lies in effective systems engineering—the discipline that ensures ML models operate efficiently and reliably within complex infrastructure.

As summarized by experts from Harvard University in their MLSysBook.ai initiative, ML systems engineering is the foundational work that transforms innovative models into deployable, scalable solutions.

ML practitioners often gravitate toward model development, drawn by the allure of novel architectures and theoretical breakthroughs.

However, without a deep understanding of the underlying systems—hardware constraints, data engineering, model optimization, deployment logistics—the performance and reliability of ML applications can suffer.

The analogy is clear: developing models is akin to astronauts exploring new frontiers, while ML systems engineers are the rocket scientists who design and build the engines that make those explorations feasible.

This perspective emphasizes that computational power, memory bandwidth, and deployment environments are not mere afterthoughts but integral to every phase of the ML lifecycle.

For instance, large language models (LLMs) exemplify the scale and complexity that demand careful system design.

Training such models requires distributed computing clusters and specialized accelerators such as GPUs or TPUs, while deployment must balance latency, throughput, and cost. MLSysBook.ai addresses this knowledge gap by providing an open-source, comprehensive resource focused on the principles and practices of ML systems engineering.
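A back-of-the-envelope calculation shows why LLMs push toward clusters and accelerators: the weights alone occupy (number of parameters) × (bytes per parameter). The 7-billion-parameter size below is a hypothetical example, and the estimate deliberately ignores activations, gradients, optimizer state, and inference caches, which add substantially more memory during training and serving.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "INT8": 1}

def weight_memory_gb(num_params: int, precision: str) -> float:
    """Memory for model weights only, in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

num_params = 7_000_000_000  # hypothetical 7B-parameter model
for precision in ("FP32", "FP16", "INT8"):
    print(f"{precision}: {weight_memory_gb(num_params, precision):.1f} GB")
# FP32: 28.0 GB
# FP16: 14.0 GB
# INT8: 7.0 GB
```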

Originating from Harvard’s Tiny Machine Learning courses, the book offers a unified framework that spans diverse scales—from tiny embedded devices to massive data centers—highlighting that despite differences in scale and numeric precision (e.g., INT8 quantization vs. FP16 floating-point), the core engineering principles remain consistent.
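The precision gap between INT8 quantization and FP16 can be measured directly. The sketch below round-trips the same hypothetical weight values through Python's half-precision struct format ('e') and through symmetric per-tensor INT8 quantization, one common scheme chosen here as an assumption, then compares the worst-case error of each.

```python
import struct

def fp16_roundtrip(x: float) -> float:
    """Store x as IEEE 754 half precision (struct format 'e') and read it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def int8_symmetric_roundtrip(values: list[float]) -> list[float]:
    """Symmetric per-tensor INT8 quantize-dequantize with q in [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # largest magnitude maps to 127
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return [q * scale for q in quantized]

weights = [0.8125, -0.3, 0.0421, -0.999, 0.5]  # hypothetical weight values

fp16_err = max(abs(w - fp16_roundtrip(w)) for w in weights)
int8_err = max(abs(w - d) for w, d in zip(weights, int8_symmetric_roundtrip(weights)))
print(f"max FP16 error: {fp16_err:.2e}")
print(f"max INT8 error: {int8_err:.2e}")
```

INT8 loses more precision here but needs half the storage of FP16. Deciding whether that trade is acceptable for a given target is the same engineering question on a microcontroller as in a data center.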

Best Practices for Efficient ML Systems.

The integration of TensorFlow’s latest capabilities with the system-level insights from MLSysBook.ai creates a powerful synergy for ML practitioners aiming to optimize their workflows across the full ML lifecycle.

Key best practices emerge from this synthesis:

① Data Engineering: Efficient data handling forms the foundation of any ML pipeline. This involves preprocessing, augmentation, and management strategies that ensure datasets are clean, representative, and optimized for ingestion. TensorFlow’s tf.data API, enhanced by autotune features, exemplifies how pipelining can be improved to reduce latency and boost throughput.

② Model Development: While exploring novel architectures, practitioners must consider hardware constraints early. Quantization-aware training and architecture search should be informed by deployment targets to balance accuracy and efficiency.

③ Optimization: Post-training techniques, including pruning, quantization, and hardware-specific tuning, are essential to maximize performance. LiteRT’s unified NPU interface exemplifies how hardware abstraction can facilitate optimization without sacrificing portability.

④ Deployment: Bringing models into production requires robust strategies for scaling, monitoring, and adapting to evolving workloads. TensorFlow’s move to modularize cloud storage support and offload on-device inference to LiteRT reflects a modular deployment mindset that enhances maintainability and scalability.

⑤ Monitoring and Maintenance: Continuous evaluation of model performance in production ensures reliability and surfaces degradation or data drift. This iterative feedback loop is critical to sustaining system health over time.
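As one concrete instance of the monitoring practice in ⑤, the sketch below computes the Population Stability Index (PSI). PSI is a common statistic for comparing a feature's production distribution against its training baseline. The bin edges, the sample data, and the 0.25 alert threshold (a commonly used rule of thumb) are assumptions for illustration, not values taken from MLSysBook.ai.

```python
import math
from bisect import bisect_right

def histogram(values, edges):
    """Fraction of values in each bin defined by sorted interior `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]

def psi(baseline, production, edges, eps=1e-6):
    """Population Stability Index: sum over bins of (p - q) * ln(p / q)."""
    q = histogram(baseline, edges)
    p = histogram(production, edges)
    total = 0.0
    for p_i, q_i in zip(p, q):
        p_i, q_i = max(p_i, eps), max(q_i, eps)  # avoid log(0) for empty bins
        total += (p_i - q_i) * math.log(p_i / q_i)
    return total

edges = [0.25, 0.5, 0.75]                       # hypothetical bins on a [0, 1) feature
baseline = [i / 100 for i in range(100)]        # training data: uniform
stable = [i / 100 + 0.001 for i in range(100)]  # production: essentially unchanged
shifted = [math.sqrt(v) for v in baseline]      # production: mass moved toward 1

ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold
for name, prod in (("stable", stable), ("shifted", shifted)):
    score = psi(baseline, prod, edges)
    print(f"{name}: PSI={score:.3f}, drift={'yes' if score > ALERT_THRESHOLD else 'no'}")
```

In production the same computation would run on a schedule over recent traffic, with alerts feeding the retraining loop described above.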

By embracing these principles and leveraging tools like TensorFlow 2.20 and MLSysBook.ai, ML teams can move beyond isolated model development to architect integrated systems that deliver consistent, efficient, and scalable outcomes.

As the field advances, prioritizing system-level thinking will distinguish successful ML projects capable of meeting evolving production demands.