Introduction

## Why AI’s Next Moves Will Blow Your Mind

Look, if you think AI is just about slick chatbots or voice assistants reading off scripted answers, you’re missing the bigger picture. The real game is how giant language models, the ones with hundreds of billions or even trillions of parameters, get wired to *think* and *decide* in ways that look a lot like human reasoning. We’re not just throwing data at a black box anymore. We’re building AI systems that act like sharp, autonomous agents, juggling complex information and making decisions on the fly. And the infrastructure that supports all this? It’s evolving fast, too. So what’s really cooking behind the scenes?

Two big things: smarter AI workflows that handle info like a team of expert detectives, and next-level hardware setups that keep these massive models humming without blacking out the power grid. Let’s break it down.

How AI Learns to Think Like a Team

You’ve probably heard of Retrieval-Augmented Generation, or RAG for short. It’s the foundation for feeding AI models the right information *at the right time* so they don’t just hallucinate nonsense. The classic approach, what folks call “Native RAG,” is pretty straightforward: take a user’s question, turn it into an embedding vector the system can compare against stored content, search a database of documents for the closest matches, rank those documents by relevance, and have the model generate a response from the best bits. That’s cool, right?
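Here’s what that Native RAG loop looks like as a minimal Python sketch. It’s a toy, not anyone’s production pipeline: the `embed` function is a bag-of-words stand-in for a real embedding model, the three-document corpus is made up, and `generate` just assembles the grounded prompt instead of calling an LLM. Scoring and ranking are folded into one step here; real systems often add a separate reranker model on top.

```python
import math
from collections import Counter

# Toy document store (hypothetical data standing in for a vector database).
DOCS = {
    "policy": "employees must file expense reports within 30 days",
    "travel": "travel bookings require manager approval before purchase",
    "security": "laptops must use full disk encryption and strong passwords",
}

def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve_and_rank(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Embed the query, score every document, and keep the top-k by similarity."""
    q = embed(query)
    scored = [(doc_id, cosine(q, embed(text))) for doc_id, text in DOCS.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

def generate(query: str, context: list[str]) -> str:
    """Placeholder for the LLM call: builds the grounded prompt it would receive."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer '{query}' using only:\n{joined}"

def native_rag(query: str) -> str:
    top = retrieve_and_rank(query)
    return generate(query, [DOCS[doc_id] for doc_id, _ in top])

print(native_rag("when must expense reports be filed"))
```

Swap `embed` for a real embedding model and `DOCS` for a vector database, and the shape of the pipeline stays the same: embed, search, rank, generate.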

But here’s the kicker: that’s just the tip of the iceberg. Enter “Agentic RAG.” Instead of one pipeline doing all the work, Agentic RAG sets up a whole *team* of mini-agents, each assigned its own chunk of information. Picture a squad in which each member specializes in a different document, plus a captain (called the Meta-Agent) who pulls their insights together into the bigger picture. This setup isn’t just smarter; it’s downright *nimble*.
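To make the squad-and-captain idea concrete, here’s a rough Python sketch. `DocumentAgent`, `MetaAgent`, and `Finding` are hypothetical names, and each “agent” is plain keyword matching over one document where a real system would make an LLM call. The point is the shape: fan the question out to per-document agents, score what comes back, and let the coordinator merge only the relevant findings.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    source: str           # which document agent produced this
    evidence: list[str]   # sentences the agent considers relevant
    score: float          # share of the document's sentences that matched

class DocumentAgent:
    """Hypothetical agent responsible for exactly one document."""

    def __init__(self, name: str, text: str):
        self.name = name
        # Break the document into sentences the agent can cite back.
        self.sentences = [s.strip() for s in text.split(".") if s.strip()]

    def investigate(self, question: str) -> Finding:
        terms = set(question.lower().split())
        # Keyword overlap stands in for an LLM judging relevance.
        hits = [s for s in self.sentences if terms & set(s.lower().split())]
        score = len(hits) / len(self.sentences) if self.sentences else 0.0
        return Finding(self.name, hits, score)

class MetaAgent:
    """Hypothetical coordinator: fans the question out, then merges what comes back."""

    def __init__(self, agents: list[DocumentAgent], min_score: float = 0.1):
        self.agents = agents
        self.min_score = min_score

    def answer(self, question: str) -> dict[str, list[str]]:
        findings = [agent.investigate(question) for agent in self.agents]
        relevant = [f for f in findings if f.score >= self.min_score]
        relevant.sort(key=lambda f: f.score, reverse=True)  # strongest evidence first
        return {f.source: f.evidence for f in relevant}

meta = MetaAgent([
    DocumentAgent("q1_report", "Revenue grew 12 percent in Q1. Hiring slowed in Europe."),
    DocumentAgent("q2_report", "Revenue fell 3 percent in Q2. Marketing spend doubled."),
    DocumentAgent("hr_memo", "The new parental leave policy starts in July."),
])
result = meta.answer("how did revenue change")
print(result)
```

The HR memo never makes it into the answer because its agent found nothing relevant, while both quarterly reports contribute their revenue lines. In a real system each `investigate` call would be a model call, and the agents could run concurrently.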

These agents act on their own: they adjust their focus to whatever the question demands and take proactive steps like pulling in extra information or calling external tools. That turns AI from a passive librarian into an active problem-solver that can compare documents, highlight contradictions, or suggest the next move, all in real time. Organizations buried in complex data, such as compliance auditors, research teams, or executives hunting for competitive intel, stand to gain a lot. Instead of sifting through documents one at a time, Agentic RAG orchestrates reasoning across many documents with flair, freeing human brains for the tough calls instead of the grunt work.
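Here’s one way the “highlight contradictions” step could work once each agent has pulled structured facts out of its document. This is an assumption-heavy sketch: `extract_facts` simply reads `key: value` lines (a real agent would have an LLM do the extraction), and the contract snippets are invented. The coordinator groups facts by key and flags any key whose sources disagree.

```python
from collections import defaultdict

def extract_facts(text: str) -> dict[str, str]:
    """Hypothetical extractor: parses 'key: value' lines (an LLM would do this in practice)."""
    facts = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)
            facts[key.strip().lower()] = value.strip()
    return facts

def find_contradictions(docs: dict[str, str]) -> dict[str, dict[str, str]]:
    """Return every fact whose value differs across documents, with each source's version."""
    by_key: dict[str, dict[str, str]] = defaultdict(dict)
    for name, text in docs.items():
        for key, value in extract_facts(text).items():
            by_key[key][name] = value
    # A key is contradictory if its sources report more than one distinct value.
    return {key: versions for key, versions in by_key.items() if len(set(versions.values())) > 1}

docs = {
    "master_agreement": "Renewal date: 2025-03-01\nPayment terms: net 30",
    "amendment_2": "Renewal date: 2025-06-01\nPayment terms: net 30",
    "sales_notes": "Payment terms: net 45\nAccount owner: J. Rivera",
}
conflicts = find_contradictions(docs)
for key, versions in conflicts.items():
    print(f"{key}: {versions}")
```

Here the renewal date and payment terms get flagged, while the account owner, which only one document mentions, doesn’t.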

The Hardware That Makes It Happen

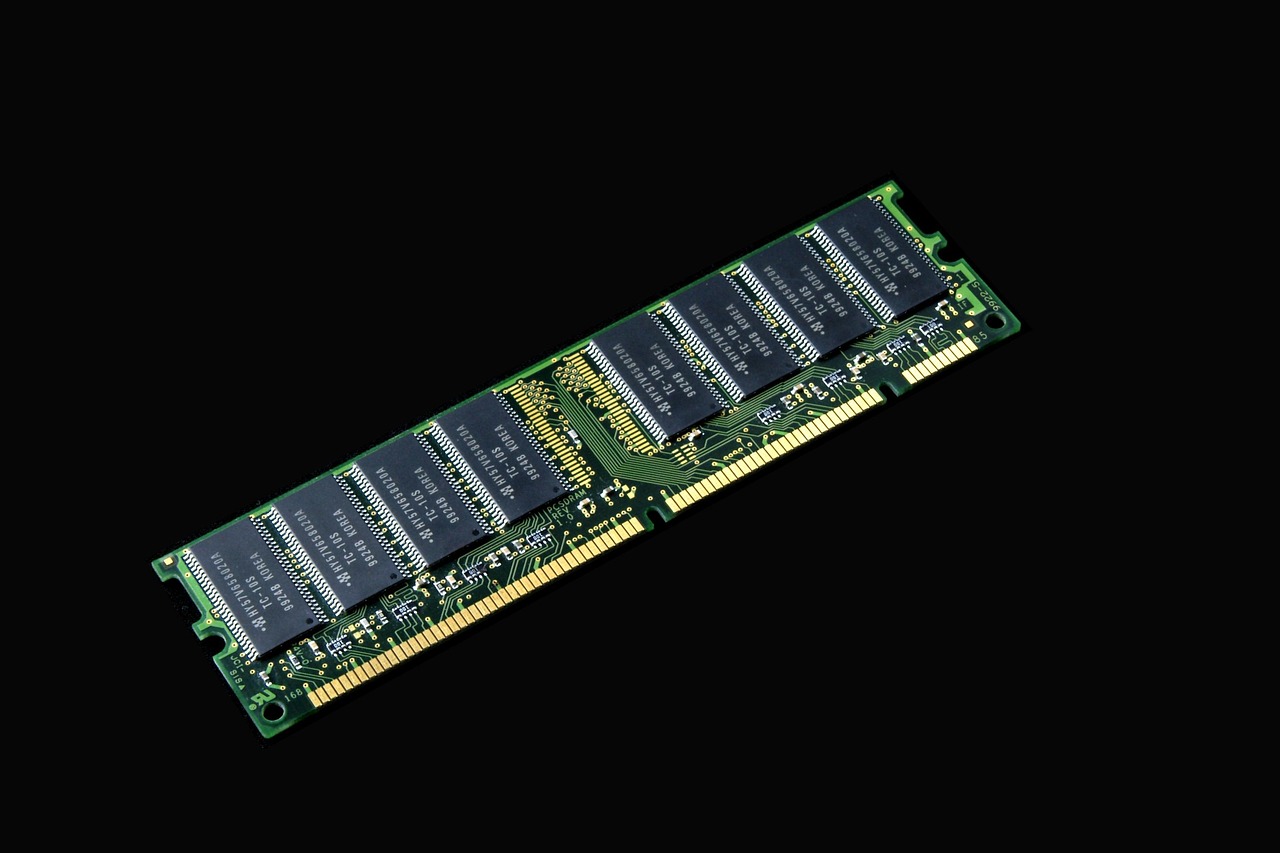

Alright, all this clever AI thinking is only half the story. You need the muscle to back it up, especially now that models like DeepSeek-R1 and LLaMA-4 are pushing toward *trillion-parameter* territory. The problem? These beasts need insane amounts of compute, lightning-fast memory, and the kind of bandwidth you’d expect only from a NASA supercomputer. Huawei’s answer?

CloudMatrix, a fresh take on data center architecture that ditches the old-school hierarchical design for a full-on peer-to-peer network. Picture this: 384 NPUs (neural processing units) and 192 CPUs, all wired together by a “Unified Bus” that lets any chip talk to any other directly, cutting out the usual bottlenecks and waiting in line.

This isn’t just a tech flex. It solves real headaches, like handling the *sparse* activation patterns in mixture-of-experts (MoE) models, where only a few parts of the model are “awake” for any given token. CloudMatrix lets compute, memory, and network bandwidth scale independently and be pooled across the whole system, which suits huge context windows (we’re talking *millions* of tokens) and complex parallel workloads.

The results speak volumes. Huawei reports that its CloudMatrix-Infer framework, running on this hardware, sustains more than 6,600 tokens per second per NPU during prefill and nearly 2,000 tokens per second per NPU during decoding, while keeping per-token decode latency under 50 milliseconds. By Huawei’s own benchmarks, that beats the efficiency of some of the best NVIDIA setups out there. And they pulled it off using INT8 quantization, which shrinks the model and speeds up inference while, in their tests, preserving output quality.
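What does “only parts of the model are awake” actually mean? In an MoE layer, a small gating network scores every expert for each token, and only the top-k experts run. Below is a generic top-k router in plain Python, assuming softmax over the selected logits (one common variant; DeepSeek’s models use a different gating function, so don’t read this as their router). The eight scalar “experts” are hypothetical placeholders for full feed-forward blocks.

```python
import math
from typing import Callable

def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits: list[float], k: int = 2) -> dict[int, float]:
    """Select the k highest-scoring experts and renormalize their gate weights."""
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in chosen])
    return dict(zip(chosen, weights))

def moe_forward(x: float, experts: list[Callable[[float], float]],
                gate_logits: list[float], k: int = 2) -> float:
    """Run only the routed experts; every other expert stays idle for this token."""
    routing = top_k_route(gate_logits, k)
    return sum(weight * experts[i](x) for i, weight in routing.items())

# Eight toy "experts" (hypothetical): expert i just multiplies its input by i + 1.
experts = [lambda x, s=i + 1: s * x for i in range(8)]
gate_logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]  # made-up router scores for one token

routing = top_k_route(gate_logits, k=2)
print(routing)                                  # experts 4 and 1 are selected
print(moe_forward(1.0, experts, gate_logits))   # weighted mix of experts 4 and 1 only
```

In a big deployment those experts are spread across many accelerators, so each token’s routing decision turns into chip-to-chip traffic. That’s the kind of all-to-all communication a high-bandwidth interconnect like the Unified Bus is meant to absorb.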

Why This Matters Right Now

Look, AI’s not some sci-fi dream anymore. It’s embedded in everything from how businesses make decisions to how your phone understands you. But if we don’t get the *right* systems in place, we’re just remixing yesterday’s tools. Native RAG pipelines are great, but they hit a ceiling when it comes to deep reasoning and flexible workflows across tons of data. Agentic RAG pushes past that ceiling by turning AI into a collaborative, proactive agent that can really move the needle on complex tasks. And none of that happens without the guts to run these models efficiently. Huawei’s CloudMatrix suggests that traditional data centers won’t cut it as AI models keep ballooning in size and complexity: peer-to-peer architectures with smart resource pooling look like the future if we want AI that’s fast, scalable, and reliable.

If you’re an enterprise leader or an AI practitioner, here’s what to keep an eye on:

1. Moving beyond static retrieval to agent-driven, multi-document coordination for richer insights.
2. Investing in infrastructure that supports massive models with huge context windows and MoE architectures.
3. Balancing efficiency with accuracy through smart quantization and hardware-software co-design.
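Point 3 is easier to reason about with numbers in hand. Below is textbook symmetric per-tensor INT8 quantization: one scale factor maps the largest absolute weight to 127, everything is rounded to an integer, and dequantizing multiplies back by the scale. The weights are made up, and nothing here says which INT8 scheme CloudMatrix-Infer actually uses (production systems usually use finer-grained per-channel or per-block scales), so treat this as an illustration of the trade-off, not their method.

```python
def quantize_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor INT8: one scale maps the largest |value| to 127."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp into the symmetric range [-127, 127].
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Map the integers back to approximate floating-point values."""
    return [x * scale for x in q]

weights = [0.82, -1.27, 0.003, 0.45, -0.6]  # made-up FP32 weights
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, worst-case error={max_err:.4f}")
```

Each weight now takes 1 byte instead of 2 (FP16/BF16) or 4 (FP32), and the rounding error per weight is capped at half the scale. The catch is that a single outlier weight inflates the scale for everyone, which is why finer-grained scales tend to preserve accuracy better.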

Bottom Line

We’re standing at a crossroads where AI decision-making isn’t just automated; it’s *autonomous* and adaptable. The shift from Native to Agentic RAG is like moving from a car with one driver to a whole pit crew working in sync. And you can’t have that without a racetrack built for speed and flexibility, which is exactly what CloudMatrix is designed to deliver.

So, what’s really going on here? We’re watching AI evolve from a fancy search engine into a multi-agent system that thinks, plans, and acts, backed by hardware designed not just to keep up but to power the next generation of AI breakthroughs. If you want to be part of the future, this is the blueprint. And honestly, it’s pretty damn exciting to watch it unfold.