Introduction

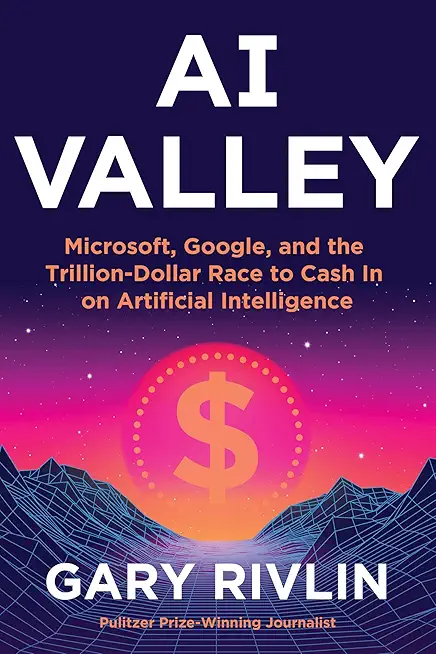

## Why AI Labs Are Changing the Game

Okay, here’s the deal: artificial intelligence isn’t just some new shiny toy anymore. It’s fast becoming the *electricity* of our time. You know, that kind of game-changer that flips everything upside down and forces us to rethink how we live and work. And if you think AI breakthroughs are just about building bigger or flashier models, think again. There’s a fresh, kinda old-school approach brewing that might just shake up how AI gets tamed and turned into everyday tools. You’ve probably heard of Jeremy Howard, co-founder of fast.ai and a guy who’s been pushing AI accessibility for years. Well, he’s teamed up with Eric Ries, the Lean Startup guru who’s obsessed with turning ideas into sustainable businesses, and together they launched something called Answer.AI. What’s wild about this?

They’re not chasing the holy grail of Artificial General Intelligence (AGI). They’re focusing on the stuff right in front of us: making *existing* AI models actually useful for real people. Think of it like Thomas Edison’s original invention lab back in the day. Edison didn’t just mess around with light bulbs for the heck of it. He wanted to figure out how to harness electricity’s power in practical ways that could change lives. That’s exactly the vibe at Answer.AI. They’re combining hardcore research with a relentless focus on development, the “D” in R&D that often gets short shrift in other labs.

Here’s the kicker: they’re building a tiny, fully remote team of AI generalists. Not hundreds of specialists siloed off doing their own thing, but a handful of hungry, agile folks who know the foundations and can switch gears fast. Jeremy’s been there. He’s watched how a small, nimble group armed with the right tools can punch way above its weight. That’s what they’re betting on.

## What’s The Real AI Problem

Look, the AI hype train has been barreling full speed for a minute now. With OpenAI, Anthropic, and others pushing boundaries, some experts are already saying “AGI is here.” But hold up—what does that mean for the rest of us?

Plenty of companies are still stuck figuring out how to practically apply these powerful models beyond shiny demos. Here’s where Answer.AI’s angle gets interesting: they’re diving into questions that big labs often ignore, like how to fine-tune smaller models to work better for everyday tasks or how to remove barriers that hold back wider AI adoption. It’s the collection of “small” problems that actually matter when you want AI to be more than just science fiction. They’re also serious about ethics and safety, not just the usual AI hype. Jeremy and his team have been teaching courses on practical data ethics for years, so they’re not about to ignore the real-world impact of algorithmic decision-making gone sideways. Answer.AI wants AI that’s not only powerful but aligned with what people actually need.

## Can AI Really Learn That Fast

Here’s something that’ll blow your mind and make you question what we thought we knew about neural networks. A recent experiment with large language models (LLMs) on a tough science exam revealed they might be able to *memorize* new examples after seeing them just once. Yeah, you read that right. One shot. Boom. Memorized. This goes against decades of thinking that neural networks need tons of examples and training epochs before they really “get it.” If this pans out, it means we might have to rethink how we train and use AI models. Could it be that these LLMs are way more sample-efficient than we gave them credit for?

If a model can learn quickly from just a few examples, that changes the whole game for fine-tuning AI and deploying it in niche applications. Imagine teaching an AI a new skill by showing it a handful of examples instead of feeding it mountains of data. That’s a huge leap. It could mean faster, cheaper AI development cycles and more personalized AI products. And if that’s not exciting, I don’t know what is.

## Why The Old Ways Won’t Cut It

It’s tempting to think that the path to AI breakthroughs is a straight line: research first, then development. But Eric Ries, with his Lean Startup background, points out this is backwards. Development and research should *dance* together, constantly informing each other. Otherwise, you end up with research that’s brilliant but useless, or development that’s hacky and brittle.

This approach is exactly why Answer.AI is so intriguing. They’re setting practical development goals first, then letting those goals shape their research direction. It’s a feedback loop that drives smarter innovation and real-world impact. Plus, with Eric’s experience running the Long-Term Stock Exchange, they’re building for the long haul, balancing profit with societal good instead of just chasing the next quarter’s earnings report.

## What’s Next For AI Then

Here’s the thing: nobody really knows where AI is headed, and that’s okay. Jeremy Howard quotes Michael Faraday’s famous uncertainty about electricity: sometimes you discover something huge but don’t yet know if it’s a “good thing” or a “weed.” That’s the reality of AI today. It’s messy, uncertain, and full of potential pitfalls and surprises.

But with teams like Answer.AI, combining deep expertise, a focus on practical applications, ethical rigor, and a long-term mindset, the future looks promising. They’re embracing the uncertainty and ready to experiment, fail, learn, and build up from small wins. Kind of like the Edison labs of today, but for the AI age. So, next time you hear about some flashy AI breakthrough, ask yourself: is this actually going to change my life or just blow smoke?

Because the real revolution is happening quietly—by those who understand that AI’s power lies not just in the “big wow” but in the many small, practical steps that make AI work for *us*.

And that’s the kind of story worth following.